EMuLe

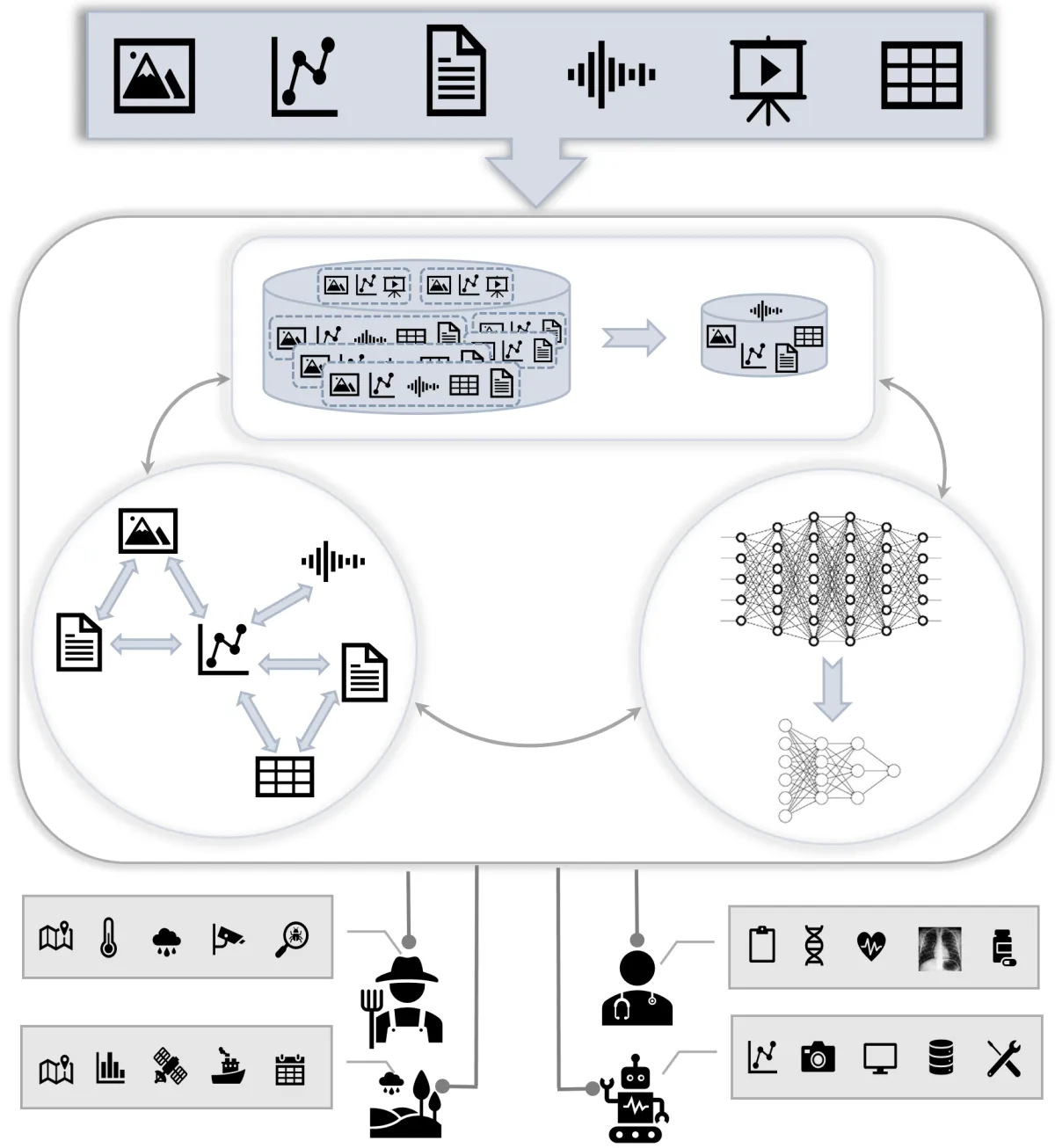

Efficient and Model-Efficient Deep Learning for Multimodal Data

Group Lead

General aim

We advance AI for multimodal learning by making deep learning more data- and model-efficient.

Focus of research

Large neural networks such as Transformers achieve outstanding results across many data modalities, but they often require large training datasets and substantial memory and compute resources. The EMuLe research group investigates two complementary goals. The first is optimizing 'training efficiency' to minimize the volume of data required. The second is improving 'model efficiency' to significantly reduce model size without degrading performance. Together, these enable scalable AI for real-world multimodal applications. We develop data- and model-efficient frameworks for multimodal learning, with time series and other temporal data as the primary modality. The team consists of three PhD students working on the following work packages:

- WP1: Data-efficient multimodal training strategies

- WP2: Model-efficient multimodal learning

- WP3: Multimodal graph-based modeling